Controlling Cloud, AI, and LLM costs

Cloud costs continue to explode as companies move toward Artificial Intelligence (AI) and Large Language Model (LLM) projects. Cloud computing prices are listed in cents or even fractions of a cent, yet they can add up to invoices of $10,000 to $50,000 or more each month. Every month, it seems, CFOs ask what the value of cloud computing is when the bills arrive. With some infrastructure and governance changes, CIOs and CTOs can generate cost savings.

Validate that development clusters are not active when not used
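
The savings at stake in this section are simple arithmetic. A minimal sketch, assuming compute is billed per running hour (storage and other fixed charges are ignored); the function names and the 08:00 to 20:00 weekday window are illustrative assumptions, not a recommended schedule:

```python
HOURS_PER_WEEK = 7 * 24  # 168


def idle_savings_fraction(active_hours_per_week: float) -> float:
    """Share of an always-on bill avoided by running only while in use."""
    if not 0 <= active_hours_per_week <= HOURS_PER_WEEK:
        raise ValueError("active hours must be between 0 and 168")
    return 1 - active_hours_per_week / HOURS_PER_WEEK


def should_be_running(hour: int, weekday: int, start: int = 8, stop: int = 20) -> bool:
    """True inside the assumed weekday working window (weekday 0 is Monday)."""
    return weekday < 5 and start <= hour < stop


# A team that uses the cluster for a quarter of the week (42 hours).
print(idle_savings_fraction(42))  # 0.75
```

A background script could call something like `should_be_running` on a timer and start or stop the cluster through whatever interface the provider offers; that call is deliberately left out here because it differs by provider.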

Turn off access to cloud resources, and stop running them, when they are not in use. Even if it is only 8 hours a day, that can generate significant savings. It is rare for anyone to use a development cluster for more than 12 hours a day for a sustained period. There are 168 hours in a week, so if you and your team work only a quarter of those hours, it’s possible to save 75% on the cost of running your development clusters. Write scripts that run in the background and manage it all for you.

Only turn on services that are needed
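
One way to imitate a service that is not the focus of the day's work is a small stand-in that returns canned answers and records everything sent to it. This is a sketch only: the `RecordingMock` class, the "inventory" service, and the `/stock` endpoint are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class RecordingMock:
    """Stand-in for a microservice that does not need to run for real today."""

    name: str
    canned_responses: dict[str, Any]
    calls: list[tuple[str, dict]] = field(default_factory=list)

    def request(self, endpoint: str, payload: dict) -> Any:
        # Keep a record of everything that arrives, to help with debugging.
        self.calls.append((endpoint, payload))
        if endpoint not in self.canned_responses:
            raise KeyError(f"{self.name}: no canned response for {endpoint!r}")
        return self.canned_responses[endpoint]


# Hypothetical service and endpoint, for illustration only.
inventory = RecordingMock("inventory", {"/stock": {"sku-1": 12}})
print(inventory.request("/stock", {"sku": "sku-1"}))
print(inventory.calls)
```

The code under test talks to `inventory` as if it were the real service, and the `calls` list shows afterwards exactly what it sent.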

Many cloud applications are constellations of machines. Instead of firing up all the machines, the CIO can employ a smart set of functions to imitate machines that are not the focus of the daily work. Mock instances of microservices can significantly shrink what is required to test new code. These mock instances can often be configured to offer more effective debugging by tracking all the data that comes their way.

Limit local disk storage
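
A background cleanup job for local disks can be quite small. The sketch below is one possible shape, not a finished tool: `purge_stale_files` is a hypothetical helper, and it assumes that anything older than the cutoff has already been copied safely to a database or object storage, which the code does not verify.

```python
import time
from pathlib import Path
from typing import Optional


def purge_stale_files(cache_dir: Path, max_age_seconds: float,
                      now: Optional[float] = None) -> list[Path]:
    """Delete regular files under cache_dir older than max_age_seconds.

    Returns the paths that were removed. Assumes the files are disposable,
    i.e. already stored elsewhere; nothing here checks that.
    """
    now = time.time() if now is None else now
    removed = []
    # Materialize the listing first so deletion does not disturb the walk.
    for path in list(cache_dir.rglob("*")):
        if path.is_file() and now - path.stat().st_mtime > max_age_seconds:
            path.unlink()
            removed.append(path)
    return removed
```

Run from a scheduler with a cutoff such as `purge_stale_files(Path("/var/cache/myapp"), 7 * 24 * 3600)`, where the directory and the seven-day window are placeholders to adapt.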

Build lightweight versions of your servers that don’t need much local storage. Most cloud instances (especially those used for LLM work) come with standard disks or persistent storage at default sizes. Limit how much disk space is assigned: instead of accepting the default, try to get by with as little as possible. This means clearing caches or deleting local copies of data once it is safely stored in a database or object storage. Write scripts that run in the background and manage it all for you.

Monitor resource usage for cloud applications
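
As a rough illustration of what watching disk usage can look like, the sketch below flags a volume once it passes a threshold. The 80% default and both function names are assumptions made for the example; a monitoring product would do far more than this.

```python
import shutil


def needs_attention(used_bytes: int, total_bytes: int,
                    warn_fraction: float = 0.8) -> bool:
    """True when the used share of a volume reaches the warning threshold."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    return used_bytes / total_bytes >= warn_fraction


def check_volume(path: str = "/", warn_fraction: float = 0.8) -> bool:
    """Check the volume that holds `path` using the standard library."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return needs_attention(usage.used, usage.total, warn_fraction)
```

Catching growth early matters because it is easier to clean up a disk before it is enlarged than to shrink it afterwards.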

Manage demand peaks and reduce the cloud resources in use during slack periods. Clouds don’t always make it easy to shrink resources, especially disks: once your disks grow, they can be hard to shrink. By monitoring these machines closely, you can ensure that your cloud instances consume only as much as they need and no more. Monitoring tools such as those from Corner Bowl Software can help.

Utilize Cold Storage (slower access to physical storage)

Some cloud providers charge a very low price, but only if you accept a latency of several hours or more. It makes sense to carefully migrate cold data to these cheaper locations. In some cases, security could be another argument for choosing this option. Scaleway boasts of using a former nuclear fallout shelter to physically protect data.

Utilize alternative less expensive providers

Some cloud providers offer lower prices for similar services. Evaluate secondary providers based on prices and services. These providers can also compete on access latency, offering faster “hot storage” response times. Of course, you’ll still have to wait for your queries to travel over the general Internet instead of just inside one data center, but the difference can still be significant. Affordable providers also sometimes offer competitive terms for data access.

Use spot (surplus) machines of cloud providers
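
Because a spot machine can disappear mid-task, background jobs that run on one are usually written so they can pick up where they left off. The sketch below shows one simple way to do that with a checkpoint file; `run_resumable` and its `stop_after` parameter (which only simulates an interruption) are hypothetical, and the checkpoint would need to live on storage that outlives the machine.

```python
import json
from pathlib import Path


def run_resumable(items, checkpoint: Path, process, stop_after=None) -> bool:
    """Process items in order, recording progress after each one.

    Returns True when every item is done, False if the run stopped early.
    `stop_after` exists only to simulate the machine being taken away.
    """
    done = json.loads(checkpoint.read_text())["done"] if checkpoint.exists() else 0
    handled = 0
    for index in range(done, len(items)):
        if stop_after is not None and handled >= stop_after:
            return False  # simulated shutdown; progress is already saved
        process(items[index])
        checkpoint.write_text(json.dumps({"done": index + 1}))
        handled += 1
    return True
```

Calling the function again with the same checkpoint file skips the items that were already finished, so a monthly report interrupted halfway loses at most the item in progress.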

Some cloud providers run auctions on spare machines. If you can run tasks without firm deadlines when the spot price is low, spot machines are great for background work like generating monthly reports. However, spot machines can be shut down without much warning, so applications that run on them should not be critical.

Look at the value of long-term contract commitments
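
Whether a commitment pays off depends on how much of the time the hardware would otherwise be running. A minimal sketch of that comparison, assuming a flat percentage discount on the on-demand rate and a commitment that is billed for every hour whether used or not (real contracts vary, and the function names are illustrative):

```python
def break_even_utilization(discount: float) -> float:
    """Utilization above which a commitment beats paying on demand."""
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    return 1 - discount


def cheaper_option(on_demand_hourly: float, discount: float,
                   expected_utilization: float) -> str:
    """Compare average hourly cost of committing versus paying on demand."""
    committed = on_demand_hourly * (1 - discount)        # paid for every hour
    on_demand = on_demand_hourly * expected_utilization  # paid only while running
    return "commit" if committed < on_demand else "on-demand"


print(break_even_utilization(0.25))   # 0.75
print(cheaper_option(1.0, 0.4, 0.5))  # on-demand
print(cheaper_option(1.0, 0.4, 0.9))  # commit
```

Under these assumptions, a 25% discount is only worth taking if the machines would run more than 75% of the time, which is why commitments sit awkwardly alongside a policy of shutting down idle clusters.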

Cloud providers often offer significant discounts to organizations that make a long-term commitment to using hardware. These commitments can be ideal when you know just how much you’ll need for the next few years. The downside is that the commitment locks in both sides of the deal. You can’t just shut down machines in slack times or when a project is canceled.

Implement Service Level Agreements (SLAs)

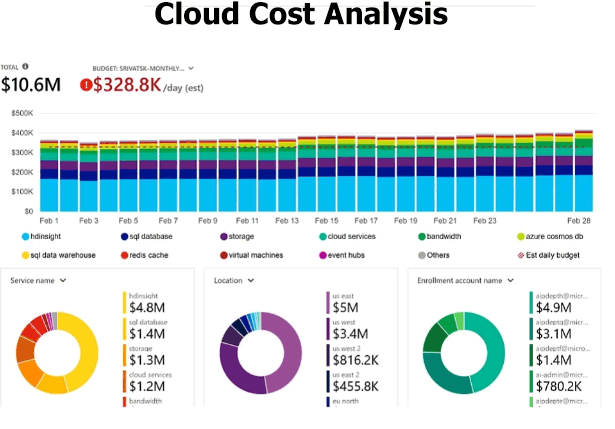

The best solution is an SLA that ties costs to performance. Broadcast the spending data to everyone on the team and in enterprise operations. Let users drill down into the numbers and see just where the cash is going. A good dashboard that breaks down cloud costs may just spark an idea about where to cut back.

Minimize dedicated servers and the number of Cloud Service Providers used in the enterprise

The cloud computing revolution is centralizing resources and then making it easy for users to buy only as much as they need. The logical extreme of this is billing by each transaction. The non-dedicated server architecture is a good example of saving money by buying only what you need. Businesses and development teams with skunk works projects or proofs of concept love these options because they can keep computing costs quite low until the demand arrives.

Manage what data to store in the cloud and locally

Programmers like to keep data around in case they might ever need it again. Tossing personal data aside not only saves storage fees but also limits the danger of releasing personally identifiable information. Stop keeping extra log files or backups of data that you’ll never use again. Many modern browsers make it possible to store data locally in object storage or even a basic version of a classic database. This can also be used to save storage costs. If the user wants to save endless drafts, well, maybe they can pay for it themselves.

Change cloud providers if their prices escalate
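
Before moving data to a cheaper location, it helps to work out how long the lower price takes to pay back the one-off transfer charge. The sketch below does that calculation; all prices in the example call are made-up placeholders, not quotes from any provider, and `months_to_recoup` is an illustrative name.

```python
from typing import Optional


def months_to_recoup(gigabytes: float, current_price: float, new_price: float,
                     transfer_fee_per_gb: float) -> Optional[float]:
    """Months until a cheaper per-GB monthly price repays the transfer fee.

    Returns None when the new location is not actually cheaper.
    """
    monthly_saving = gigabytes * (current_price - new_price)
    if monthly_saving <= 0:
        return None
    return gigabytes * transfer_fee_per_gb / monthly_saving


# Placeholder prices in dollars per GB; substitute real quotes.
print(months_to_recoup(1000, current_price=0.03, new_price=0.02,
                       transfer_fee_per_gb=0.02))
```

With these placeholder numbers the move pays for itself in about two months; a smaller price gap or a larger transfer fee stretches that out quickly.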

While many cloud providers charge the same no matter where you store your data, some are starting to change the price tag based on location. AWS, for instance, charges a different price per gigabyte in Northern California than in some of its other regions. China recently cut its prices in offshore data centers much more than in the onshore ones. Location matters quite a bit in these examples. It is not easy, however, to take advantage of these cost savings for large blocks of data, because some cloud providers charge egress fees for moving data between regions. Still, it’s a good idea to shop around when setting up new programs.

Offload cold data – consider DR/BC implications

Cutting back on some services will save money, but the best way to save cash is to go cold turkey. Nothing stops a CIO from dumping their data onto a hard disk on a desktop or down the hall in a local data center. Of course, the CIO only gets that price in return for taking on all the responsibility, the cost of electricity, and the DR/BC implications. It won’t make sense for your serious workloads, but the savings for not-so-important tasks like very cold backup data can be significant. You might also note some advantages in cases where compliance rules favor having physical control of the data.